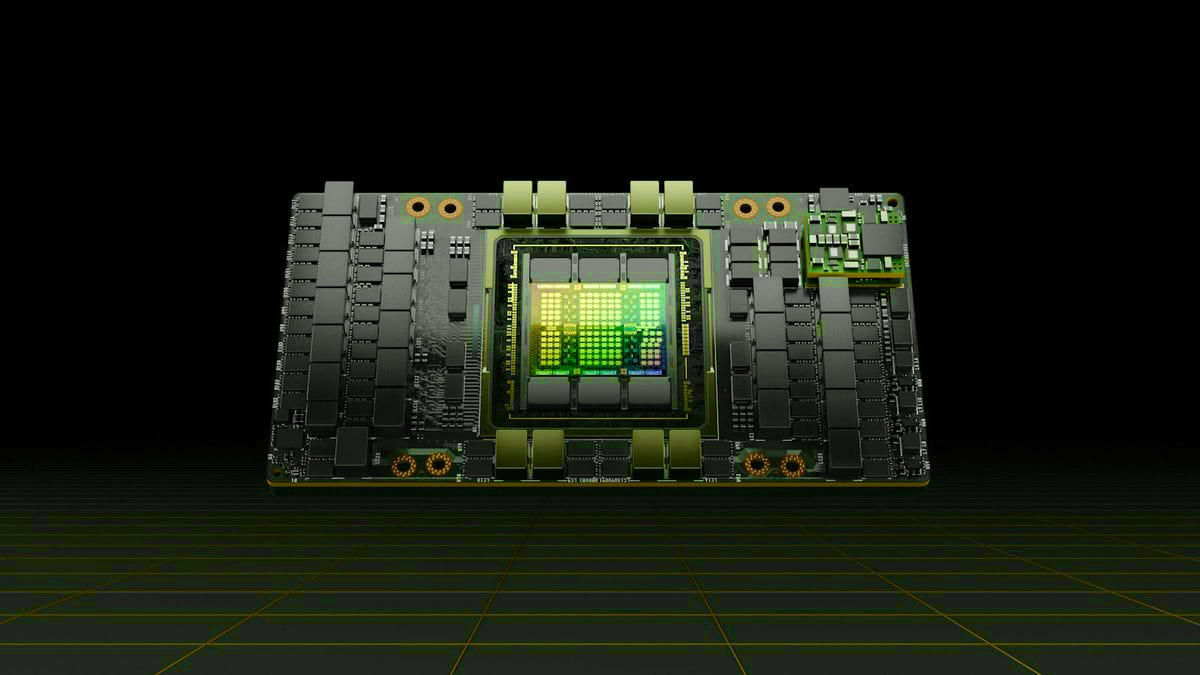

The chip design giant’s H100 GPU pays homage to computer science pioneer Grace Hopper. Nvidia announced its new H100 AI processor, a successor to the A100 that’s used today for training artificial intelligence systems to do things like translate human speech, recognize what’s in photos and plot a self-driving car’s route through traffic.

The H100 is based on a design called Hopper

The H100 is built on the Hopper design and can be paired with Nvidia’s new Grace central processing unit, Nvidia said at its GPU Technology Conference (GTC). The names honor Grace Hopper, a computing pioneer who worked on some of the world’s early computers, developed an early compiler, co-developed the COBOL programming language, and popularized the term “bug.”

With these and other GTC announcements, Nvidia now offers a diverse range of processor architectures that influence many aspects of our digital life. Nvidia may not be as well known for processors as Intel or Apple, but the Silicon Valley firm is just as vital in making next-generation technology a reality.

Nvidia also unveiled the RTX A5500, a new graphics processor in its Ampere series aimed at professionals who need graphics power for 3D work such as animation, product design, and visual data processing.

This aligns with Nvidia’s expanding Omniverse ambitions to market the tools and cloud computing services required to create the metaverse, or virtual worlds.

The H100 GPUs will ship in the third quarter, and Grace is “on track to ship next year”

The H100, which is made by Taiwan Semiconductor Manufacturing Co. (TSMC) and contains a massive 80 billion transistors in its data processing circuits, faces stiff competition. Competitors include Intel’s forthcoming Ponte Vecchio GPU, which has over 100 billion transistors, and a slew of AI accelerator chips from firms like Graphcore, SambaNova Systems, and Cerebras.

Nvidia also includes the H100 in its DGX computing modules

The H100 is also included in Nvidia’s DGX computing modules, which may be combined to construct enormous SuperPod systems. Meta, formerly Facebook, is a DGX client using an older machine in its Research SuperCluster, which the company says is the world’s fastest AI computing machine. According to Nvidia, its own DGX SuperPod system, Eos, is expected to exceed it.
